During my work with VMware products I have always needed a platform to test new products, updated versions and software dependencies. In the past I used my employer's virtual environment to deploy, install and configure the necessary systems. This had one major downside: my colleagues and I used the same environment for our tests, so our systems conflicted with each other now and then.

For the past couple of months, I have been experimenting with different lab setups, but nothing really satisfied my needs. So I decided to approach my homelab design the way I would approach any other client design: collecting requirements. (Even if it may not correspond to the level of detail of a real customer design.)

Conceptual Design

Here is the list of the central requirements for my homelab:

- R001: Small physical footprint.

- R002: Low energy consumption.

- R003: Low noise level.

- R004: Powerful enough to handle different use cases at the same time:

  - VMware vSAN (including All-Flash, multiple disk groups and automatic component rebuilds)

  - VMware NSX (including NSX DLR and NSX Edges)

  - VMware Horizon (including virtual desktops)

- R005: Portable solution for customer demonstrations.

- R006: Isolated environment without dependency or negative impact to other infrastructure components.

- R007: IPMI support to power it on and off remotely.

Logical Design

To let vSAN automatically rebuild components (see R004) even after a host failure, at least four nodes are required: three hosts are the minimum for a mirrored vSAN object, and the fourth provides a rebuild target when one host is lost. Given R001, R002 and R003, a full-sized 19-inch solution with four physical servers is not an option. Because vSAN All-Flash calls for 10 Gbit/s networking (see R004), a solution consisting of several mini PCs is also out of the question. Such a solution would not be ideal for regular assembly and disassembly either (see R005), because of the many separate components. To avoid dependencies on infrastructure components (see R006) despite the different use cases (see R004), a completely nested environment including AD, DNS and DHCP behind a virtual router seems to be the best option to me.
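The four-node figure follows from how vSAN mirroring places components. As a rough sketch (the function name and its parameters are my own, not part of any VMware tooling): a RAID-1 policy that tolerates n failures needs 2n + 1 hosts, and one more host is needed if the cluster should be able to rebuild back to full protection after a host is lost.

```python
def min_hosts_raid1(failures_to_tolerate: int, spare_for_rebuild: bool = True) -> int:
    """Minimum host count for a vSAN RAID-1 (mirroring) policy.

    Mirroring needs 2n + 1 hosts to tolerate n failures
    (n + 1 replicas plus n witness components). One extra host
    gives vSAN a place to rebuild components after a host failure.
    """
    if failures_to_tolerate < 0:
        raise ValueError("failures_to_tolerate must be >= 0")
    hosts = 2 * failures_to_tolerate + 1
    if spare_for_rebuild:
        hosts += 1  # rebuild target once one host is gone
    return hosts


if __name__ == "__main__":
    # Tolerating one failure with room to rebuild: the case in this design.
    print(min_hosts_raid1(1))
    # Bare minimum without a rebuild target.
    print(min_hosts_raid1(1, spare_for_rebuild=False))
```

This only covers mirroring; erasure-coding policies have different minimums and are not modelled here.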

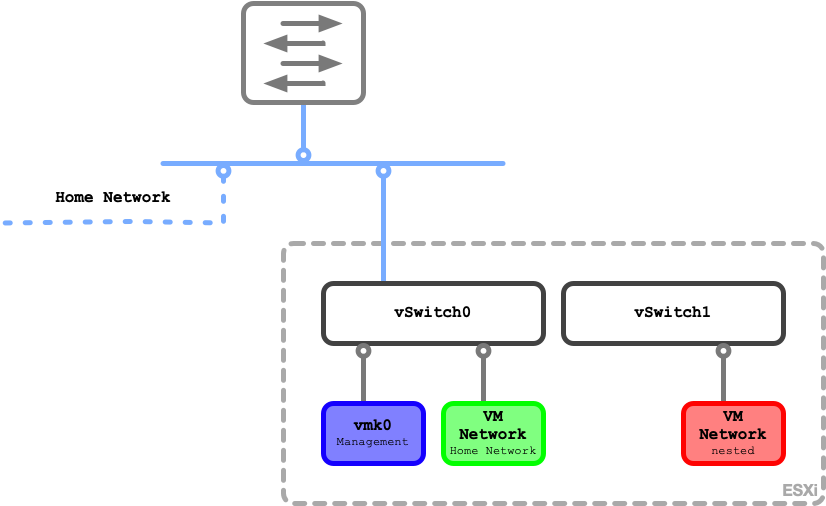

The network design of the physical ESXi host is as simple as possible and as complex as necessary. I configured two vSphere Standard Switches (vSS). The first one (vSwitch0) contains the VMkernel NIC enabled for management traffic and a standard port group for the VMs that need a connection to the Home network. The second one (vSwitch1) handles all the nested virtualization magic.

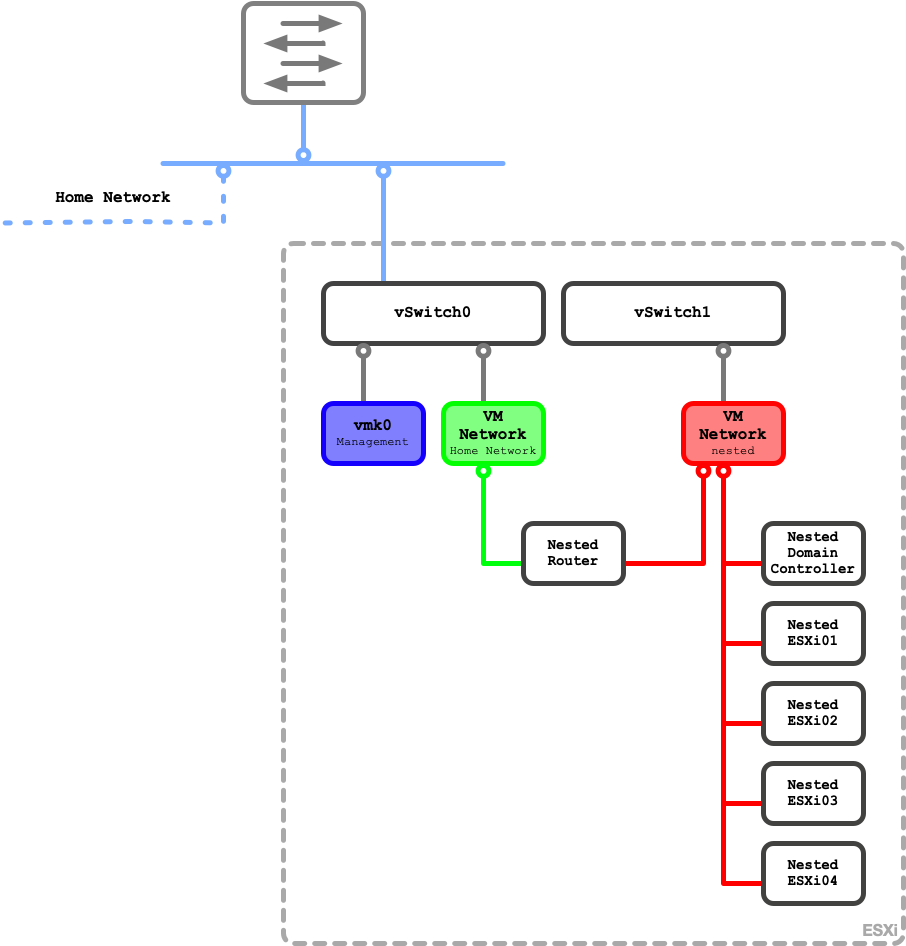

To make nested virtualization work, I configured vSwitch1 to accept promiscuous mode, MAC address changes and forged transmits. I also enabled jumbo frames (MTU 9000) on it so that I can use it for the VXLAN overlay networks of VMware NSX-V. All VMs that form the nested environment attach to vSwitch1.
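For readers who prefer the command line over the Host Client, the same vSwitch settings can be expressed as `esxcli` calls. The sketch below only builds and prints the command lines; the helper name is hypothetical, and the exact option names should be checked against `esxcli network vswitch standard --help` on your ESXi version before running anything.

```python
import shlex


def vswitch_nested_commands(vswitch: str = "vSwitch1", mtu: int = 9000) -> list[list[str]]:
    """Return esxcli argument lists that prepare a standard vSwitch
    for nested ESXi: jumbo frames plus a permissive security policy."""
    return [
        # Raise the MTU so VXLAN-encapsulated frames fit without fragmentation.
        ["esxcli", "network", "vswitch", "standard", "set",
         f"--vswitch-name={vswitch}", f"--mtu={mtu}"],
        # Let nested hosts see and send frames for MAC addresses
        # other than the one assigned to their own virtual NIC.
        ["esxcli", "network", "vswitch", "standard", "policy", "security", "set",
         f"--vswitch-name={vswitch}",
         "--allow-promiscuous=true",
         "--allow-mac-change=true",
         "--allow-forged-transmits=true"],
    ]


if __name__ == "__main__":
    # Print the commands for review instead of executing them.
    for argv in vswitch_nested_commands():
        print(shlex.join(argv))
```

Printing instead of executing keeps the sketch safe to run anywhere; on the host itself the lines can be pasted into an SSH session.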

Six VMs are deployed natively on the physical ESXi host. The first VM is a virtual router which acts as the gateway to the outside world for the nested systems. It is configured with two network interfaces: one faces the Home network, the other connects to the nested environment. The second VM is a domain controller running AD, DNS, DHCP and ADCS. The remaining four VMs are the nested ESXi hosts which later form the vSphere cluster for all other workloads. The router and the domain controller can be regarded as "physical" workloads running outside of the nested virtualization.
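The layout of these six VMs can be written down as a small inventory, which makes the isolation property easy to check: only the router should touch both networks. The VM names and the "Nested" port group name below are placeholders of mine, not the names used in the actual lab.

```python
from dataclasses import dataclass

HOME = "Home network"  # port group on vSwitch0
NESTED = "Nested"      # hypothetical port group name on vSwitch1


@dataclass(frozen=True)
class LabVM:
    name: str
    role: str
    networks: tuple[str, ...]


# The six VMs that run directly on the physical ESXi host.
INVENTORY = (
    LabVM("router", "virtual router / gateway", (HOME, NESTED)),
    LabVM("dc", "AD, DNS, DHCP, ADCS", (NESTED,)),
    *(LabVM(f"esxi{i}", "nested ESXi host", (NESTED,)) for i in range(1, 5)),
)


def gateways(vms):
    """Names of VMs attached to both the Home and the nested network."""
    return [vm.name for vm in vms if HOME in vm.networks and NESTED in vm.networks]


if __name__ == "__main__":
    print(len(INVENTORY), "VMs, bridging both networks:", gateways(INVENTORY))
```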

Physical Design

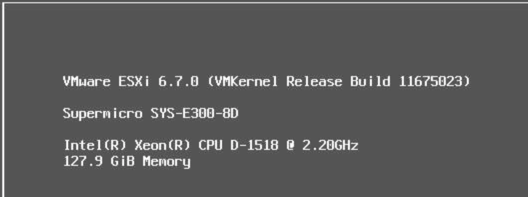

I went for a single Supermicro SuperServer E300-8D as a starting point. This not-so-small beauty convinced me with its solid base specification and its numerous expansion options. I replaced the two stock fans with Noctua ones and added a third one for better overall cooling.

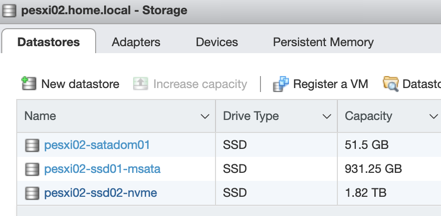

I was able to reuse most of the components from my homelab experiments and a nice system with 128GB RAM, a 64GB SATADOM device, a 1TB mSATA SSD and a 2TB NVMe SSD came together.

For all who are interested, here is the complete bill of materials of my current homelab configuration.

| Item | SKU | Quantity |
| --- | --- | --- |
| Supermicro SuperServer E300-8D | SYS-E300-8D | 1 |
| Noctua premium fan 40x40mm | NF-A4x20 PWM | 3 |
| Kingston ValueRAM 32GB | KVR24R17D4/32MA | 4 |
| Supermicro SATADOM 64GB | SSD-DM064-SMCMVN1 | 1 |
| Samsung 850 EVO mSATA 1TB | MZ-M5E1T0BW | 1 |
| Samsung PM983 NVMe 3.84TB | MZ1LB3T8HMLA | 1 |

Wrap-Up

With this space- and power-saving configuration I have enough resources to run a nested four-node vSAN cluster with NSX-V and VMware Horizon on top of it. In one of my next posts, I’ll go deeper into the configuration of the nested ESXi hosts and the associated implementation of VMware vSAN.
